The Future of Caching in .NET is “Velocity”…

Caching has always been a way to achieve better performance by bringing data closer to where it is consumed thus avoiding what can be a bottleneck to its original data source (usually the database). Due to the architecture of the Internet itself, caching often takes place outside of the Enterprise. This caching can happen in a user’s browser, on proxy servers, or on Content Distribution Networks (CDNs), etc. This type of caching is great because results are served up without even entering the infrastructure of the enterprise that hosts the application.

Once a request makes it into the infrastructure of the Enterprise, it is up to us as Enterprise developers and architects to handle these requests and the associated data efficiently, in ways that yield good performance and scale so as not to overload the resources of the Enterprise. To make this possible, there are various caching techniques that can be employed.

We are starting to see a trend towards applications that are becoming more data and state driven, especially as we are just beginning the journey into cloud-based computing. At this year’s PDC, Microsoft has shown many new and enhanced technologies that are more and more data and state driven. These include: Oslo, Azure, Workflow Foundation, “Dublin”, etc. To enable the massive scale that these technologies will help to provide, caching will become extremely important and will probably be thought of as a new tier in the application architectures of the future. Caching will be crucial to achieve the scale and performance that users will demand.

Common Caching Scenarios

When thinking about the data in the Enterprise we begin to uncover some very different scenarios that could benefit from caching. We also quickly realize that caching is not a “one size fits all” proposition because a solution that makes sense in one scenario may not make sense in another. To get a better understanding of this, let’s talk more about the three basic scenarios that caching tends to fall into:

Reference Oriented Data

Reference data is typically written infrequently but frequently read. This type of data is an ideal candidate for caching. Most of the time, when we think about caching data, this is the type of data that first comes to mind. Examples of this could include: A product catalog, a schedule of flights, etc. Getting this type of data closer to where it is consumed can have huge performance benefits. It also doesn’t overload database resources with queries that are generating similar results.

Activity Oriented Data

Activity data is written and read by only one user as a result of a specific activity and is no longer needed when the activity is completed. While this type of data is not what we typically think of as a good candidate for caching, it can yield scalability benefits if we do find an effective caching strategy. An example of this type of data would be a shopping cart. An appropriate distributed caching strategy will allow better overall scale for an application because requests can be served easily by load balanced servers that do not require sticky sessions. To handle this, the caching strategy must be able to handle many of these exclusive collections of data in a distributed way.

Resource Oriented Data

The trickiest of all is what’s known as Resource data. Resource data is typically read and written very frequently by many users simultaneously. Because of the volatility of this data it is not often thought of as a candidate for caching, but it can yield big benefits in both performance and scale if an efficient strategy can be found. Examples of this type of data include the inventory for an online bookstore, the seats on a flight, the bid data for an online auction, etc. In all of these examples, although the data is very volatile, in a high throughput situation it would be very slow if every request needed to result in a database access. The challenge for caching this type of data is having a strategy that can be distributed in order to scale properly, along with the necessary concurrency and replication so that the underlying data is consistent across machines.

Current Caching Technologies in .NET

There are existing .NET technologies that can be used to provide caching today. Some of these are tied to the web tier (e.g. ASP.NET Cache, ASP.NET Session and ASP.NET Application Cache) while some are more generic in their usage (e.g. Enterprise Library’s Caching Application Block). These are all great caching technologies for smaller applications but they have limitations that prevent them from being used for large Internet scale applications.

When it comes to caching technologies for larger Internet scale applications, there are some 3rd party products in the space that do an excellent job, one of these being NCache by Alachisoft. Microsoft is now also jumping into this space with a new caching technology codenamed “Velocity.” Velocity can be configured to handle all of the caching scenarios described above in a performant and highly scalable way.

What is “Velocity?”

“Velocity” is Microsoft’s new distributed caching technology. Although it is not scheduled to be released until the middle of 2009, it already has many impressive caching features, with lots of very useful and ambitious features slated for future releases. In Microsoft’s own words from the “Velocity” project website, they define “Velocity” in the following way:

Microsoft project code named “Velocity” provides a highly scalable in-memory application cache for all kinds of data. By using cache, application performance can improve significantly by avoiding unnecessary calls to the data source. Distributed cache enables your application to match increasing demand with increasing throughput using a cache cluster that automatically manages the complexities of load balancing. When you use “Velocity,” you can retrieve data by using keys or other identifiers, named “tags.” “Velocity” supports optimistic and pessimistic concurrency models, high availability, and a variety of cache configurations. “Velocity” includes an ASP.NET session provider object that enables you to store ASP.NET session objects in the distributed cache without having to write to databases, which increases the performance and scalability of ASP.NET applications.

In a nutshell, Velocity allows a cache to be distributed across servers which has huge scalability benefits. It also allows for pessimistic and optimistic concurrency options. This along with other features is what makes “Velocity” a great choice for the caching needs of large scale applications.

Features in Velocity

There are many features, both existing and planned, that will make Velocity a compelling caching technology that far exceeds the existing caching options offered by Microsoft.

Current Features:

Simple Caching Access Patterns Get/Add/Put/Remove

It is very easy using Velocity’s API to perform the standard cache access including Adding new items to the cache, getting items from the cache, putting updates to cache items and removing items from the cache.

Tag Searching

When saving a cache item to a specific cache “region” (regions are discussed later in this post) you are able to add one or more string tags to that entry. It is then possible to query the cache for entries that contain one or more tags.

Distributed Across Machines

A cache can be configured to exist across machines. The configuration options for this are quite extensive. By allowing cache items to be distributed across machines, the cache is allowed to scale out as new cache servers are added. The other existing caching technologies offered by Microsoft only allow for scaling up. Scaling up can be very expensive as the caching needs increase, and it often hits a limit on the amount of caching that a single machine can support. With “Velocity” you can scale out a cache across hundreds of machines if needed, effectively fusing the memory of these machines together to form one giant cache, all with low cost commodity hardware.

High Availability

Velocity can be configured to transparently store multiple copies of each cache item when it is stored in the cache. This provides high availability by helping to guarantee that a given cache item will still be accessible even in the event that a caching server fails. Of course, the more backup copies of an item that you configure, the greater the guarantee that your data will survive a failure. Velocity is also smart enough to make sure that each backup copy of a cache item lives on a separate cache server.

Concurrency

Velocity supports both Optimistic and Pessimistic concurrency models when updating an item in the cache. With Pessimistic concurrency, you would request a lock when retrieving an item from the cache that you were going to update. Then you would be required to unlock the object after it is updated. In the meantime, no one else would be able to obtain a lock for that item until it was either unlocked or the lock expired. With Optimistic concurrency, no lock is needed. Instead, the original version information is passed along when an item is updated. During the update, Velocity will check to see if the version that is being updated on the caching server is the same version that was edited. An error is passed back to the caller if the versions do not match. For performance reasons it is always better to use optimistic concurrency if the situation can tolerate it.
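To make the optimistic version check more concrete, here is a minimal in-memory sketch. It is not the Velocity API: the `VersionedCache` class and its `Get`, `Put` and `TryUpdate` methods are hypothetical names made up for illustration, and the sketch returns `false` on a version mismatch where the real cache would report an error.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for a versioned cache; NOT the Velocity API.
public sealed class VersionedCache
{
    private readonly Dictionary<string, (object Value, long Version)> _items =
        new Dictionary<string, (object Value, long Version)>();
    private readonly object _gate = new object();

    // Returns the value together with the version the caller must present on update.
    public (object Value, long Version) Get(string key)
    {
        lock (_gate) { return _items[key]; }
    }

    // Unconditional write; always bumps the version.
    public long Put(string key, object value)
    {
        lock (_gate)
        {
            long next = _items.TryGetValue(key, out var current) ? current.Version + 1 : 1;
            _items[key] = (value, next);
            return next;
        }
    }

    // Optimistic update: succeeds only if nobody changed the item since it was read.
    public bool TryUpdate(string key, object value, long expectedVersion)
    {
        lock (_gate)
        {
            if (!_items.TryGetValue(key, out var current) || current.Version != expectedVersion)
                return false; // version mismatch: the caller should re-read and retry

            _items[key] = (value, current.Version + 1);
            return true;
        }
    }
}
```

If two callers read the same item and both try to update it, the first `TryUpdate` wins and the second one fails because its version is stale. No lock is held between the read and the write, which is where the performance advantage comes from.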

Management and Monitoring

Velocity is managed using PowerShell. There are over 130 functions that can be performed through PowerShell. For example: you can create caches, set configuration info, start a cache server, stop a cache server, etc.

ASP.NET Integration

Velocity comes with a Session State provider that can be “plugged into” ASP.NET so that the Session State information is stored inside Velocity as opposed to the standard ASP.NET session provider. Using the Velocity provider is completely transparent: the session object is used just as it was with the ASP.NET session provider. This automatically scales session state across servers in a way that does not require sticky sessions.

Local Cache Option

Velocity can be configured so that when an item is retrieved from the distributed cache, it is also cached locally on the server that retrieved it. This makes it faster to retrieve the same object if it is asked for again. The real savings here are in network latency and the time it would take to de-serialize the cache item. Cache items stored inside the Velocity cache are always serialized in memory, but cache items stored in the local cache (when configured) are always stored natively as objects. So, if the memory space can be afforded, this can be quite a performance boost.
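The local cache idea can be sketched as a two-level lookup. This is only an illustration under stated assumptions: `LocalFirstCache` is a hypothetical class, the `fetchRemote` delegate stands in for the network call to the distributed cache, and UTF-8 decoding stands in for de-serialization.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical two-level lookup; NOT the Velocity API.
public sealed class LocalFirstCache
{
    // Local copies are kept as live objects, so a hit costs no network hop and no de-serialization.
    private readonly Dictionary<string, string> _local = new Dictionary<string, string>();

    // Stand-in for the call to the distributed cache, which returns serialized bytes.
    private readonly Func<string, byte[]> _fetchRemote;

    public int RemoteCalls { get; private set; }

    public LocalFirstCache(Func<string, byte[]> fetchRemote)
    {
        _fetchRemote = fetchRemote;
    }

    public string Get(string key)
    {
        if (_local.TryGetValue(key, out var hit))
            return hit; // served from local memory

        RemoteCalls++;
        byte[] payload = _fetchRemote(key);
        if (payload == null)
            return null; // not in the distributed cache either

        string value = Encoding.UTF8.GetString(payload); // stands in for de-serialization
        _local[key] = value;
        return value;
    }
}
```

The sketch never expires or invalidates local copies, so it would happily serve stale data. A real local cache needs some policy for that, which this example deliberately leaves out.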

Can add Caching Servers at Runtime

Several times during the sessions on Velocity at the PDC, the presenter would add or remove a caching server at runtime. When this was done, the cluster of Velocity caching servers would react immediately and start to redistribute cache items across the cluster. This intelligence was very impressive and is the same smarts that is able to react if a caching server has a hardware failure, again redistributing cached items across the cluster. With this ability it is possible to dynamically add more caching power at runtime without losing any cached data because of a system restart.

Future Features

Although no indication was given as to when the additional features described below would make it into the Velocity framework it was very encouraging to hear about the many features that were in the queue for a future release. Here are some of the ones mentioned at the PDC:

Security

In future releases of Velocity, security will need to be a greater consideration. Currently, information stored inside the Velocity cache is not secured in any way. Having access to the cache means that you have access to anything stored in the cache. In future versions of Velocity there will be security options that will allow you to secure items in the cache using several different techniques. The future planned security options are:

- Token-based Security – when storing items in the cache you will be able to specify a security token along with that item. That security token will need to be presented in order to retrieve the item from the cache.

- App ID-based Security – This option will allow you to register a domain account with a named cache. This way only specific users will be able to access a specified cache. Note: “Named caches” are discussed later in this post.

- Transport Level Security – This option will allow the standard transport level security offered by the various WCF bindings.

Cache Event Notifications

In the future, when anything happens that affects the cache, a notification will be sent across the cache cluster and to any other subscribed listeners. These events, when implemented, will allow a view into all of the actions taken on the cache. This will include notifications sent when items are added, updated and removed from the cache.

Write Behind

In many scenarios, we want the cache itself to front the data access to our system. This can provide very high performance and throughput. To make this as efficient as possible, it would be great if we could write to the cache and have the cache write the data to its backend data store. This is what is referred to as “Write Behind”. The actual writing to the backend happens asynchronously, so the caller does not have to wait for this write to happen and only has to wait for the item to be written to the cache memory. This is possible and safe because of the high availability features offered in Velocity. Because the cache data can be backed up inside the cache, there is little risk that a machine failure will prevent the data from being written to the backend data store.
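Since Write Behind was only described as a planned feature, there is no API to show yet. The following is just a sketch of the general pattern, with hypothetical names throughout (`WriteBehindCache`, `FlushPending`, and the `writeToStore` delegate that stands in for the backend data store).

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical write-behind sketch; NOT a Velocity feature or API.
public sealed class WriteBehindCache
{
    private readonly ConcurrentDictionary<string, string> _memory =
        new ConcurrentDictionary<string, string>();
    private readonly ConcurrentQueue<KeyValuePair<string, string>> _pending =
        new ConcurrentQueue<KeyValuePair<string, string>>();
    private readonly Action<string, string> _writeToStore;

    public WriteBehindCache(Action<string, string> writeToStore)
    {
        _writeToStore = writeToStore;
    }

    // The caller only waits for the in-memory write; the backend write is queued.
    public void Put(string key, string value)
    {
        _memory[key] = value;
        _pending.Enqueue(new KeyValuePair<string, string>(key, value));
    }

    public bool TryGet(string key, out string value)
    {
        return _memory.TryGetValue(key, out value);
    }

    // In a real system a background worker would call this; here it is explicit
    // so the behavior is easy to follow. Returns the number of writes performed.
    public int FlushPending()
    {
        int written = 0;
        while (_pending.TryDequeue(out var entry))
        {
            _writeToStore(entry.Key, entry.Value);
            written++;
        }
        return written;
    }
}
```

Between `Put` and `FlushPending` the new value exists only in cache memory, which is exactly the window that the high availability backups are meant to protect.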

Read Through

A future release of Velocity will also provide a “Read Through” feature. Read Through allows the cache to fetch an item if it doesn’t currently hold the item within the cache. This is both a convenience and a performance enhancement because multiple calls are not needed to retrieve an item from the cache when it is not already there. This again would allow the cache itself to be the data access tier with the cache itself handling the communications with the backend data source.
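As with Write Behind, Read Through was only announced, so the following is a pattern sketch rather than anything from Velocity. `ReadThroughCache` and the `loadFromSource` delegate are hypothetical names; the delegate stands in for whatever call reaches the backend data source.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical read-through sketch; NOT a Velocity feature or API.
public sealed class ReadThroughCache<TValue>
{
    private readonly ConcurrentDictionary<string, TValue> _items =
        new ConcurrentDictionary<string, TValue>();
    private readonly Func<string, TValue> _loadFromSource;

    public ReadThroughCache(Func<string, TValue> loadFromSource)
    {
        _loadFromSource = loadFromSource;
    }

    // One call for the caller: a miss is filled from the backend by the cache itself.
    // Note that GetOrAdd may invoke the loader more than once for the same key when
    // several threads miss at the same time; only one result is kept.
    public TValue Get(string key)
    {
        return _items.GetOrAdd(key, _loadFromSource);
    }
}
```

The caller no longer needs the usual "check the cache, go to the database on a miss, then put the result back" sequence, which is what makes the cache usable as the data access tier.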

Bulk Access

Future versions of Velocity will provide access methods that will allow bulk operations to be performed on the cache. This again can enhance performance in some scenarios simply by removing chatty calls to the cache when larger chunkier calls could be made.

LINQ support

Being able to query the cache using LINQ will open up many very interesting scenarios. Having LINQ support will truly transform caching into its own robust tier in the overall architecture in the Enterprise.

HPC Integration

Other future features talked about with regards to Velocity involve High Performance Computing (HPC). Up to this point, caching was all about placing data as close as possible to where it is consumed. When we begin to think about HPC scenarios, we are actually viewing the problem from the opposite point of view, that is, we are trying to put the processing as close to the data as possible. In the PDC sessions, they mentioned that the Velocity team is interested in exploring ways to move calculations and processing close to the items inside the cache.

Cloud

With the announcement of Windows Azure and other cloud based initiatives, all major technologies at Microsoft are trying to figure out how they can fit into this new paradigm of computing. It is not clear exactly how Velocity will take part in the cloud, but I would imagine that at some point Velocity caching will be available to applications hosted in the Windows Azure cloud.

Velocity’s Architecture

Physical Model

Velocity caching is architected to run on one or more machines, each using a cache host service. Although you can run more than one cache host service on a single machine, it is generally not advised as you do not get the full protection that Velocity’s high availability feature has to offer when a machine failure occurs. Multiple cache host services are meant to be run together as part of a cache cluster. This cache cluster is really taken to be one large caching service. The services that are part of the cluster actually interact together and are configured as a unit to offer all of the redundancy and scale that Velocity has to offer. When the cluster is started, all of the cache hosts that make up the cluster read their configuration information either from SQL Server or from a common XML configuration file that is stored on a network-accessible file share. A few of the cache hosts are assigned the additional responsibility of being “Lead Hosts”. These special hosts track the availability of the other cache hosts and also perform the necessary load balancing tasks for the cache as a whole.

Logical Model

While the cache clusters and associated cache hosts make up the physical view of the Velocity cache, “Named Caches” are used to make up the logical view of the cache. Velocity can support multiple named caches, which act as separate isolated caches within a Velocity cache cluster. Each Named Cache can span all of the machines in the cluster, storing its items across various machines. When those machines are configured to store items redundantly, the named cache gains a tolerance for machine failure (since any item in the cache can live in multiple places on different machines).

Inside a given named cache, Velocity offers another optional level in which to cache objects called “Regions.” There can be multiple “Regions” for any given Named Cache. When saving cache items into a Region it is possible to add “Tags” to these items so that they can be searched and retrieved by more than a simple cache ID (which is how caches normally work). The tradeoff in using regions is that all of the items in a given region are stored in the same cache host. This is done because the cache items need to be indexed in order to provide the searching functionality that “Tags” provide. Even though all of the items in a region are stored on the same host, they can still be backed up onto other hosts in order to provide the high availability that Velocity offers. So, while Regions support great tag-based searching, items stored in a Region do not get the same distributed scalability as items stored outside of one.
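To see why tag searching pushes a whole region onto one host, it helps to look at what a tag index might look like. The sketch below is an assumption about the general technique, not Velocity’s implementation; `TaggedRegion` and its methods are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical single-host region with a tag index; NOT Velocity's implementation.
public sealed class TaggedRegion
{
    private readonly Dictionary<string, object> _items = new Dictionary<string, object>();

    // The index maps each tag to the keys that carry it. Because it must see every
    // item in the region, it is simplest to keep the region's items in one place.
    private readonly Dictionary<string, HashSet<string>> _keysByTag =
        new Dictionary<string, HashSet<string>>();

    public void Put(string key, object value, params string[] tags)
    {
        // Simplification: old tags are not removed when a key is overwritten.
        _items[key] = value;
        foreach (var tag in tags)
        {
            if (!_keysByTag.TryGetValue(tag, out var keys))
                _keysByTag[tag] = keys = new HashSet<string>();
            keys.Add(key);
        }
    }

    public object Get(string key)
    {
        return _items.TryGetValue(key, out var value) ? value : null;
    }

    public IEnumerable<object> GetByTag(string tag)
    {
        return _keysByTag.TryGetValue(tag, out var keys)
            ? keys.Select(k => _items[k]).ToList()
            : new List<object>();
    }
}
```

A lookup by tag is a dictionary hit followed by a few key lookups, all in local memory. Spreading the items across hosts would turn that into a fan-out query, which is the cost that co-locating a region avoids.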

Programming Model

So what does the code look like when getting and saving objects into the Velocity cache?

To access a Velocity cache, you must first create/obtain a cache factory. From the cache factory you can get an instance of the named cache you wish to use (in the example, we are getting the “music” named cache).

CacheFactory factory = new CacheFactory();

Cache music = factory.GetCache("music");

Next, I will put an item into the cache. In the example below, I am caching the “Abbey Road” CD using its ASIN number as the cache key.

music.Put("B000002UB3", new CD("Abbey Road", .,.));

To retrieve an item from the cache, simply call the cache’s “Get” method passing in the key to the cache item you wish to retrieve.

CD cd = (CD)music.Get("B000002UB3");

To create a region, simply call the “CreateRegion” method of the cache passing in the desired name for the region you wish to create. In the example below, I create a “Beatles” region:

music.CreateRegion("Beatles");

Below, I show an example where two items are being put into the same region. When using regions, you must always specify the region along with the key and object you wish to cache.

music.Put("Beatles", "B000002UAU", new CD("Sgt. Pepper’s…",.));

music.Put("Beatles", "B000002UB6", new CD("Let It Be",.));

Lastly, below, I show how to retrieve a cache item from a region. Notice that the Region name must be specified along with the key.

CD cd = (CD)music.Get("Beatles", "B000002UAU");

How is High Availability Achieved?

As previously mentioned, Velocity has a feature that helps to promote high availability for the items in the cache. To gain this “High Availability”, caches can be configured to store multiple copies of an object when it is put into the cache. When this is configured, Velocity ensures that a given item is stored in multiple cache hosts (which is why it is advisable to only run one cache host per physical server).

In order to achieve high availability without adversely impacting performance, when putting an item into the cache, Velocity will write the cache item to its primary location and to only one secondary location before returning to the caller. Then, after returning to the caller, Velocity will asynchronously write the cache item to other backup locations up to the number of backups configured for that cache. Doing this ensures that the object being cached exists in at least two places (which gives the minimum requirement for high availability) but doesn’t hold up the caller while fulfilling all of the configured backup requirements.
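The "two copies before returning, the rest afterwards" behavior can be sketched as follows. This is a toy model under loud assumptions: `ReplicatingCache` is a hypothetical class, each dictionary plays the role of one cache host, and host 0 is always the primary, whereas the real cluster decides placement itself.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical model of the replication order; NOT Velocity's implementation.
public sealed class ReplicatingCache
{
    // Each dictionary stands in for the memory of one cache host.
    private readonly ConcurrentDictionary<string, string>[] _hosts;

    public ReplicatingCache(int hostCount)
    {
        if (hostCount < 2)
            throw new ArgumentException("High availability needs at least two hosts.");
        _hosts = new ConcurrentDictionary<string, string>[hostCount];
        for (int i = 0; i < hostCount; i++)
            _hosts[i] = new ConcurrentDictionary<string, string>();
    }

    // Writes the primary and one secondary synchronously, then fills the remaining
    // backups in the background. The returned task completes when all copies exist.
    public Task Put(string key, string value, int backups)
    {
        if (backups < 1 || backups >= _hosts.Length)
            throw new ArgumentOutOfRangeException(nameof(backups));

        _hosts[0][key] = value; // primary
        _hosts[1][key] = value; // first backup: the caller waits for this one too

        return Task.Run(() =>
        {
            for (int i = 2; i <= backups; i++)
                _hosts[i][key] = value; // remaining backups, off the caller's path
        });
    }

    public int CopiesOf(string key)
    {
        int copies = 0;
        foreach (var host in _hosts)
            if (host.ContainsKey(key)) copies++;
        return copies;
    }
}
```

As soon as `Put` returns, `CopiesOf` reports at least two copies, and once the returned task completes it reports one primary plus the configured number of backups.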

Since Regions are required to live entirely inside one cache host, to achieve “High Availability” Velocity backs up the entire Region to another host.

Release Schedule

Just before the PDC08, the Velocity team released its CTP2 (Community Technology Preview 2). During the PDC they stated the release schedule for Velocity to be the following:

CTP3 would be released during the MIX09 conference (scheduled for Mid-March 09).

RTM release scheduled for Mid-2009.

Other Resources

If you would like to know more about Velocity, I would suggest you view the following presentations given at the PDC08:

Project “Velocity”: A First Look – presenter: Murali Krishnaprasad

Project “Velocity”: Under the Hood – presenter: Anil Nori

Also, there was a very informative interview on Scott Hanselman’s podcast “Hanselminutes”: Distributed Caching with Microsoft’s Velocity

I would also recommend the Velocity article on MSDN: “Microsoft Project Code Named Velocity CTP2” as well as the Velocity Team Blog on MSDN.

Managed Extensibility Framework (MEF) and other extensibility options in .NET…

In recent years the patterns and frameworks used to facilitate extensibility in applications and frameworks have been getting more and more attention. The idea of extensibility becomes important if we are writing applications and frameworks that need to be flexible in order to have broad use. These applications often need to accommodate situations that are completely unknown at the time they are being written. Allowing for extensibility can make applications and frameworks more resilient and somewhat future proof.

There are several patterns and frameworks that are often used for extensibility. I will cover a few of these ending off with a discussion of the Managed Extensibility Framework that will be built into .NET 4.0 (the next release of .NET yet to be released).

Inversion of Control/Dependency Injection

One such extension pattern that has become popular over the last few years is the idea of “Inversion of Control” and “Dependency Injection.” The “Inversion of Control” pattern inverts the more traditional software development practice where objects are created, and their lifetimes managed, directly by the code that uses them. Using the Inversion of Control pattern, object creation and lifetime are controlled and configured at the upper rather than the lower levels of the application, usually through what’s known as an Inversion of Control container (IOC container). Once configured, the IOC container can be used to create objects with a specified interface. When using IOC containers, it is important that object dependencies inside your application are expressed using interfaces rather than the actual classes. IOC Containers facilitate extensibility because the actual classes used in lower levels of your application can be changed down the road without needing to edit these class libraries, making your code flexible and resilient.

There are several very popular IOC containers that are available for use in your code. Many of these containers offer lots of robust features that allow you to control the lifetime of the created objects as well as being able to perform Dependency Injection (DI). Using Dependency Injection an IOC container can automatically set properties and constructor parameters of a created object when the object is being built up by the IOC container. Very powerful stuff.
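The idea is easier to see with a toy container. The sketch below does not use any of the containers listed next; `TinyContainer`, `IMessageSender`, `EmailSender`, `SmsSender` and `OrderNotifier` are all hypothetical names, and real containers add lifetime management and automatic constructor resolution on top of this.

```csharp
using System;
using System.Collections.Generic;

public interface IMessageSender
{
    string Send(string text);
}

public sealed class EmailSender : IMessageSender
{
    public string Send(string text) { return "email: " + text; }
}

public sealed class SmsSender : IMessageSender
{
    public string Send(string text) { return "sms: " + text; }
}

// Depends only on the interface; it never creates its own sender.
public sealed class OrderNotifier
{
    private readonly IMessageSender _sender;

    public OrderNotifier(IMessageSender sender) { _sender = sender; }

    public string OrderShipped(int orderId)
    {
        return _sender.Send("Order " + orderId + " shipped");
    }
}

// Hypothetical minimal container: maps a service type to a factory.
public sealed class TinyContainer
{
    private readonly Dictionary<Type, Func<object>> _factories =
        new Dictionary<Type, Func<object>>();

    public void Register<TService>(Func<TService> factory) where TService : class
    {
        _factories[typeof(TService)] = () => factory();
    }

    public TService Resolve<TService>() where TService : class
    {
        return (TService)_factories[typeof(TService)]();
    }
}
```

At the top level of the application you would register `IMessageSender` with a factory such as `() => new SmsSender()` and register `OrderNotifier` with a factory that passes `container.Resolve<IMessageSender>()` to its constructor. Swapping SMS for email is then a one-line change in that configuration, and `OrderNotifier` is never edited.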

The following are some of the more popular IOC containers available today:

- Unity : This is an IOC container developed by Microsoft’s Patterns and Practices group built on top of Enterprise Library’s Object Builder.

- Castle Windsor : This was one of the original IOC container implementations and is very sophisticated in what it can do and how it can be configured.

- StructureMap : This IOC container implementation was created by “Alt.net” blogger Jeremy Miller to help facilitate software development best practices including test driven development. This IOC implementation introduced an innovative “fluent” configuration interface that makes it easy to configure without using XML.

- Ninject : This is another innovative IOC/Dependency Injection container written by Nate Kohari that also uses an XML-free fluent interface to configure.

Managed Add-in Framework (MAF)

Another extensibility option offered by Microsoft is the Managed Add-in Framework (MAF). This was introduced in the System.AddIn namespace in .NET 3.5. This framework is a great way to allow 3rd parties to write plugins for your application.

The interesting thing about this framework is that plugins can be configured to run inside their own app domain. This is great because it prevents 3rd party plugins from crashing your application. It also can allow “sandboxing” of a 3rd party add-in so it can be more tightly secured.

Another interesting feature of this framework is that it provides a way to allow plugins to be fully forward and backward compatible with different versions of a host application. This is possible because there is actually an isolation layer that exists between the application and its plugins. This isolation is achieved using the add-in pipeline (a.k.a. the communication pipeline). It is this pipeline that provides the support for the versioning and isolation provided by this framework.

In simple terms, a contract is defined for the add-in that is implemented in both the host application and the add-in itself. When developing an add-in, the Host and Add-in side adapters and views are code generated into four different assemblies using a “Pipeline Builder” which is a Visual Studio Add-in that can be found here. These generated parts do most of the heavy lifting needed to do things like crossing app domains and handling add-in activation.

The “Host View of the add-in” and the “Add-in View” are both generated abstract classes that contain separate views of the object model that both the host and the add-in share. The side adaptor classes are used to adapt methods to and from the contract. The contract, which is an interface inherited from IContract, is the only type that is loaded with both the host and the add-in.

Addins created with this framework can be shared across different applications as long as they share the same contract. This makes these add-ins very versatile.

As I mentioned above, another huge benefit of MAF is the ability to have forward and backward compatibility when using addins. The diagram below shows a host application that has been updated from version 1 to version 2 and how it can consume addins built for the new version (V2) as well as the older version (V1).

To make this possible, the V1 addin just needed a new Addin side adaptor to adapt the V2 contract to the V1 addin.

As you can see, MAF is a pretty powerful plug-in technology. The main downside that I can see is the number of assemblies that need to be created for a plugin (although they are generated).

Here are some interesting articles on MAF, if you’d like to know more:

- MSDN : CLR Inside Out – .NET Application Extensibility – Part 1

- MSDN : CLR Inside Out – .NET Application Extensibility – Part 2

Managed Extensibility Framework (MEF)

I learned about a new extensibility framework at the PDC08 called the Managed Extensibility Framework (MEF). Since I started following conversations and blog posts on MEF, I have seen lots of talk about how it compares to other extensibility options like the ones described in this blog post (i.e. IOC Containers and MAF). The MEF team is quick to point out that although MEF has a lot in common with these, it is not being designed as a replacement for IOC containers or MAF.

In the words of Krzysztof Cwalina, a program manager on the .NET Framework team:

MEF is a set of features referred in the academic community and in the industry as a Naming and Activation Service (returns an object given a “name”), Dependency Injection (DI) framework, and a Structural Type System (duck typing). These technologies (and other like System.AddIn) together are intended to enable the world of what we call Open and Dynamic Applications, i.e. make it easier and cheaper to build extensible applications and extensions.

MEF takes the idea of extensibility many steps forward in that it allows for applications to be created from composable parts. Unlike MAF, which can connect a host application to an addin that implements a specific interface, MEF doesn’t really distinguish between host and addin. Instead, it allows interfaces to be designated as either Import or Export, then uses MEF’s CompositionContainer (which is similar to an IOC container) to hook up the imports and exports at runtime as objects are requested from the container. This takes the IOC concept further because MEF actually manages the dependencies between parts.

To break down MEF into its core building blocks, MEF consists of a “Catalog” that holds meta-data regarding “Parts” that can be used to Compose an application. The “Parts” are objects that can expose their behavior to other parts (which is referred to as an Export) or the Parts can consume the behavior of other parts (which is referred to as an Import). Parts can even export their behavior and at the same time import the behavior of another part. These parts are composed at runtime using all of the import and export meta-data collected by a CompositionContainer.

So as you can see, MEF is really all about composition rather than a pure plugin architecture because the lines between host and extension are blurred. In fact the host application can expose its functionality to the extensions as well as the extensions exposing functionality that can be used by the host application. The other interesting thing is that the extensions themselves can be composed from each other, where a given extension can depend on functionality offered by another extension in a very loosely coupled way. Managing all of these dependencies is something that MEF does exceptionally well.

In Glenn Block’s session, "A Lap Around the Managed Extensibility Framework", at the PDC08, he talked about this composition in terms of “Needs” and “Haves”. For example, a part A may say that “I have a toolbar” while another part B may say “I need a toolbar”. In this simple case, part A would “Export” its toolbar interface while part B “Imports” a toolbar interface. Even though part A and part B know nothing about each other, MEF’s CompositionContainer can hook up these two parts to compose the total functionality at runtime.
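The “Needs” and “Haves” matching can be imitated in a few lines of reflection code. To be clear, this is not MEF: the `Have` and `Need` attributes and the `TinyComposer` class are hypothetical stand-ins invented for this sketch, which matches parts by a string contract name the way the toolbar example describes.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical attributes for this sketch only; they are NOT MEF's attributes.
[AttributeUsage(AttributeTargets.Class)]
public sealed class HaveAttribute : Attribute
{
    public string Contract { get; }
    public HaveAttribute(string contract) { Contract = contract; }
}

[AttributeUsage(AttributeTargets.Property)]
public sealed class NeedAttribute : Attribute
{
    public string Contract { get; }
    public NeedAttribute(string contract) { Contract = contract; }
}

public interface IToolbar
{
    string Name { get; }
}

// Part A: "I have a toolbar".
[Have("toolbar")]
public sealed class PartA : IToolbar
{
    public string Name { get { return "Main toolbar"; } }
}

// Part B: "I need a toolbar". It knows nothing about PartA.
public sealed class PartB
{
    [Need("toolbar")]
    public IToolbar Toolbar { get; set; }
}

public static class TinyComposer
{
    // Fills every [Need] property on the target from the catalog type that
    // declares a [Have] with the same contract name.
    public static void Compose(object target, params Type[] catalog)
    {
        var haves = new Dictionary<string, Type>();
        foreach (var type in catalog)
        {
            var have = type.GetCustomAttribute<HaveAttribute>();
            if (have != null) haves[have.Contract] = type;
        }

        foreach (var property in target.GetType().GetProperties())
        {
            var need = property.GetCustomAttribute<NeedAttribute>();
            if (need == null) continue;

            if (!haves.TryGetValue(need.Contract, out var provider))
                throw new InvalidOperationException("No part has: " + need.Contract);

            // SetValue throws if the provider's type does not fit the property type.
            property.SetValue(target, Activator.CreateInstance(provider));
        }
    }
}
```

Calling `TinyComposer.Compose(new PartB(), typeof(PartA))` leaves the `Toolbar` property pointing at a `PartA` instance, even though neither class references the other. The only thing they share is the contract name and the `IToolbar` interface.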

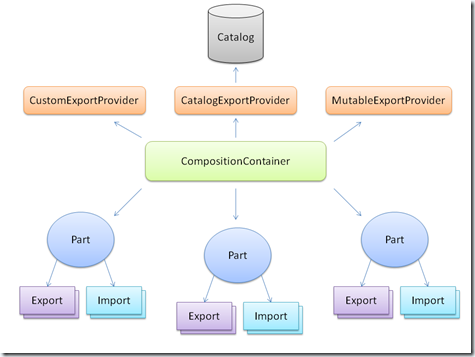

Above, we talked about the basic building blocks in MEF. Below is another diagram that expands this view just a bit more:

From this diagram you can see that a “Part” can have both “Imports” and “Exports”. As previously stated, it is the responsibility of the CompositionContainer to use the knowledge of the “Imports” and “Exports” to compose the application. It is also important to note that the parts themselves can be exposed to the CompositionContainer from a number of sources called Catalogs. There are many different types of catalogs. These include catalogs that can be hard coded with assemblies that contain parts, catalogs that can bring in parts that exist in assemblies stored in a specified directory, or even custom catalogs that can get parts from a WCF service (to name a few).

In the examples I’ve seen of catalogs that pull from a directory there has also been a notification mechanism that would allow new parts to be included and composed at runtime using a file watcher. This allows very interesting scenarios where an application/framework can be extended even while it is running.

So how is all of this composition accomplished in the MEF framework? At first glance, it would appear that in order to properly determine what behavior a given class imports and/or exports, the CompositionContainer would need to load each class in order to determine its capabilities. This sounded very slow. But as I looked further, I found that a given Part is instead decorated with attributes that are used to determine what “contract” a given class imports and exports. So it is this static meta-data that is interrogated, rather than the individual classes having to be loaded. Building up this meta-data also makes it possible to statically verify the dependency graph for all of the parts in the container.

A contract in MEF is simply a string identifier rather than an actual .NET type. So, even though a contract is meant to identify a set of specific functionality, it is really just a name that is assigned to that functionality so that it can be identified. This allows the CompositionContainer to match Imports up with Exports when it is composing the application. It is assumed that an Export with the same contract as an Import can be cast to the type of the Import at runtime. If the actual .NET type of the named Export does not match the .NET type of the associated Import property, a casting exception is thrown.
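To make the idea of matching on contract names a bit more concrete, here is a toy sketch. It is not how MEF is implemented and it does not use the MEF library at all: the ToyExport and ToyImport attributes, the ToyComposer class and the toolbar types are all hypothetical names made up for this illustration. It only shows the general idea of reading attribute meta-data through reflection and pairing an import with an export that carries the same contract string:

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical stand-ins for Export/Import attributes (NOT the real MEF types).
[AttributeUsage(AttributeTargets.Class)]
public sealed class ToyExportAttribute : Attribute
{
    public string Contract { get; private set; }
    public ToyExportAttribute(string contract) { Contract = contract; }
}

[AttributeUsage(AttributeTargets.Property)]
public sealed class ToyImportAttribute : Attribute
{
    public string Contract { get; private set; }
    public ToyImportAttribute(string contract) { Contract = contract; }
}

public interface IToolbar { string Name { get; } }

// "I have a toolbar"
[ToyExport("Toolbar")]
public class MainToolbar : IToolbar
{
    public string Name { get { return "Main toolbar"; } }
}

// "I need a toolbar"
public class Editor
{
    [ToyImport("Toolbar")]
    public IToolbar Toolbar { get; set; }
}

public static class ToyComposer
{
    // Builds a contract-name -> type map by reading attribute meta-data only;
    // none of the exporting classes are instantiated in this step.
    public static Dictionary<string, Type> BuildCatalog(params Type[] types)
    {
        var catalog = new Dictionary<string, Type>();
        foreach (Type type in types)
        {
            var export = (ToyExportAttribute)Attribute.GetCustomAttribute(type, typeof(ToyExportAttribute));
            if (export != null) catalog[export.Contract] = type;
        }
        return catalog;
    }

    // Fills every decorated property on the target with a part whose
    // export contract string equals the import contract string.
    public static void Compose(object target, Dictionary<string, Type> catalog)
    {
        foreach (PropertyInfo property in target.GetType().GetProperties())
        {
            var import = (ToyImportAttribute)Attribute.GetCustomAttribute(property, typeof(ToyImportAttribute));
            if (import == null) continue;
            object part = Activator.CreateInstance(catalog[import.Contract]);
            property.SetValue(target, part, null);
        }
    }

    public static void Main()
    {
        Dictionary<string, Type> catalog = BuildCatalog(typeof(MainToolbar), typeof(Editor));
        var editor = new Editor();
        Compose(editor, catalog);
        Console.WriteLine(editor.Toolbar.Name);
    }
}
```

Notice that Editor and MainToolbar never reference each other; the only thing they share is the contract string and the IToolbar interface.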

These Import and Export attributes have three main constructors:

- A default constructor that takes no parameters. For this constructor, the contract name is defaulted to the fully qualified name of the type of the class, property or method it decorates.

- A constructor that accepts a .NET Type. For this constructor, the contract name is set to the fully qualified name of the passed in type.

- A constructor that accepts a string. For this constructor, the contract name is set to this passed in string. Although this is an option, in the current MEF implementation, the other two constructors are recommended over this one because using this constructor can lead to naming collisions if you are not careful.

MEF allows Import attributes to decorate properties. These properties can be for an object or for a delegate. An Export attribute, on the other hand, can decorate a class, a property or a method.

Previously, MEF supported what is known as “Duck-Typing”. This allowed MEF to compose an Import and Export together as long as the “shape” of the import and export interfaces was the same. The concept of “Duck-typing” is more prevalent in dynamic languages. It is basically the idea that if two interfaces look the same (that is, their properties and methods have the same names and use the same parameter types), they are treated as equivalent even if their static types are not the same. In a nutshell, “duck-typing” says that if it looks like a duck and quacks like a duck, it must be a duck.
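As a rough illustration of the idea (and not of how MEF implemented it), here is a small sketch that uses the “dynamic” keyword planned for C# 4.0. The Duck and Robot classes are hypothetical and share no interface or base class, yet both can be used wherever something with a Speak() method is needed:

```csharp
using System;

// Two unrelated types that merely happen to have the same "shape".
public class Duck { public string Speak() { return "Quack"; } }
public class Robot { public string Speak() { return "Beep"; } }

public static class DuckTypingDemo
{
    // 'dynamic' defers the member lookup to run time, so any object that
    // has a parameterless Speak() method returning a string will work here.
    public static string MakeItSpeak(dynamic thing)
    {
        return thing.Speak();
    }

    public static void Main()
    {
        Console.WriteLine(MakeItSpeak(new Duck()));   // prints "Quack"
        Console.WriteLine(MakeItSpeak(new Robot()));  // prints "Beep"
    }
}
```

Passing an object without a Speak() method would still compile, but the call would fail at run time, which is the usual trade-off with this looser style of typing.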

This “Duck-Typing” was previously achieved in MEF by generating IL on the fly. In the current release of MEF, this duck-typing functionality has been removed. I’m told that removing the previous implementation of Duck-typing was a difficult decision that centered around the fact that the implementation would be difficult to maintain. While Duck-typing was fairly straightforward to implement in simple cases where simple types were used as parameters of methods, it could become somewhat unwieldy when dealing with complex types as parameters (especially if those complex types contained methods and properties using complex types, and so on and so on…).

From what I understand, the MEF team may bring back similar duck-typing functionality in a future version of MEF, either through the No-PIA work coming in .NET 4.0 or using other new dynamic functionality that will be a part of .NET 4.0, but they would not commit to when or if this would happen. See my previous blog post, “The future of C#…”, for more information about the new dynamic features that are being added in .NET 4.0.

OK…that’s interesting, but why would Duck-typing be important for future versions of MEF (in my opinion)? What advantages would it bring?

Many plugin-extensibility solutions require a third interface assembly that can be shared between two assemblies if they are to be composed at runtime. This requires that this interface assembly be available to the different teams that may be building these various plugin assemblies. This interface assembly can be a problem in cases where we wish to compose assemblies that were not necessarily built to be composed together. In these cases, it is very powerful to be able to match these interfaces in a looser way at runtime. This makes these composable solutions much more resilient to changes by not having to depend on actual static types. As you can see, this looser typing will be important and will be much anticipated in the future of MEF.

An example of MEF in code…

To give a more concrete view of MEF, let’s look at some simple code that shows how MEF is actually used:

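namespace MyNamespace

{

public interface ISomethingUseful

{

void UsefulMethod();

int UsefulProperty { get; set; }

}

// Exported under the contract name "MyNamespace.ISomethingUseful".
[Export(typeof(ISomethingUseful))]

public class SomethingUseful : ISomethingUseful

{

void ISomethingUseful.UsefulMethod() { }

int ISomethingUseful.UsefulProperty { get; set; }

}

public class Bar

{

// No contract given, so the contract name defaults to the property's type.
[Import]

public ISomethingUseful SomethingUseful { get; set; }

}

// Host class that owns the container (the class name is only illustrative).
public class Host

{

private CompositionContainer _container;

public void Compose()

{

// List the part types explicitly, then hand the catalog's resolver to the container.
var catalog = new AttributedTypesPartCatalog(typeof(SomethingUseful), typeof(Bar));

_container = new CompositionContainer(catalog.CreateResolver());

_container.Compose();

}

}

}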

The above code is very simple but shows how imports and exports are defined. The “Import” attribute doesn’t specify a contract, so it uses the fully qualified name of the type of the property that it decorates (in this case “MyNamespace.ISomethingUseful”). The catalog type used in this example simply lists the classes that are to be composed. Its “Resolver” is then handed to the container; the resolver is what creates the actual instances of the classes. Finally, the call to the container’s Compose() method is where everything comes together: all of the classes are created and hooked up using the specified “Import” and “Export” attributes.

This is just a very simple case where there exists a one to one cardinality between an “Import” and an “Export”. It is also possible to express multiple parts that export the same contract along with an import that accepts multiple parts with the same contract. An example of how these parts might be defined is as follows:

namespace MyNamespace

{

// A second part that exports the same contract.
[Export(typeof(ISomethingUseful))]

public class AnotherSomethingUseful : ISomethingUseful

{

void ISomethingUseful.UsefulMethod() { }

int ISomethingUseful.UsefulProperty { get; set; }

}

public class AnotherBar

{

// Importing a collection accepts every part that exports the contract.
[Import]

public IEnumerable<ISomethingUseful> UsefulSomethings { get; set; }

}

}

In the above code we see another class that exports “MyNamespace.ISomethingUseful”, along with another class that imports one or more “MyNamespace.ISomethingUseful” parts by specifying the type as IEnumerable<ISomethingUseful>.

MEF is designed specifically to handle large applications and frameworks that have high extensibility requirements. One of the first Microsoft applications to take a dependency on MEF is Visual Studio. In Scott Guthrie’s keynote address at PDC08, he showed an example where Visual Studio 2010 was using a MEF plugin to show an HTML view of C# code comments inside the actual code while it was being edited. Because of MEF, Visual Studio itself had no idea that comments were being presented differently inside the editor, because the comment viewer was plugged into a MEF extension point within Visual Studio.

I hope that I have been able to give you a feel for what MEF is, although I’ve only just touched on what it can do. I think that this framework will open up many interesting opportunities to support very rich extensibility scenarios not possible with other extensibility patterns and frameworks.

If you are interested in learning more about MEF, I would suggest you watch the session on it that was given at the PDC:

Managed Extensibility Framework: Overview – presenter: Glenn Block

The following screencast is also very good because it shows some simple coding examples using MEF:

DNRTV Show #130: Glenn Block on MEF, the Managed Extensibility Framework

Here are some other links that are also worth reading:

The Future of C# … 4.0 and beyond …

As a language, C# has had amazing growth over the years and has become the language of choice for many .NET developers. The language itself is really not that old yet it has taken hold as one of the more popular programming languages used around the world.

The evolution of C#

After the initial success of the Java programming language, Microsoft decided to create its own programming language that could be tailored to its Windows operating system and, more importantly, its emerging .NET framework.

This new language, originally called COOL (C-like Object Oriented Language) and later renamed C#, was started in early 1999 by Anders Hejlsberg (previously the chief architect of Borland’s Delphi) and his team, and was publicly announced at the 2000 Microsoft PDC.

Since that time, C# has grown by leaps and bounds and has had a very interesting evolution. In brief, the various versions of the C# language have evolved in the following way:

- C# 1.0 – C# introduced as one of the original .NET Managed Code languages written for the CLR.

- C# 2.0 – This version started to lay the groundwork for the functional features that would be in future releases. This is the version that brought us Generics. Unlike C++ Templates, Generics are first class citizens of the CLR.

- C# 3.0 – With this version we saw C# take on features that were much more functional in nature. The big prize this version brings is LINQ (Language Integrated Query). LINQ queries can be represented as expression trees and are executed lazily, when their results are needed. LINQ required an amazing amount of compiler and library support (although no changes to the CLR itself) and makes use of Lambda Expressions, which are a functional programming construct.

The Next Release, C# 4.0 – The dynamic C#

While C# 3.0 introduced functional programming concepts into the language, the primary purpose of C# 4.0 is to introduce dynamic programming concepts into the language. Dynamic programming languages have been around for quite some time, including the very popular JavaScript language. In the past couple of years a number of other dynamic languages have gained a significant amount of popularity and interest. Among these are Ruby, whose popularity can be attributed to the popular web platform “Ruby on Rails”, and Python, which is Google’s language of choice for their cloud-based offering.

Microsoft already has a couple of .NET-based dynamic programming languages, IronRuby and IronPython, built on top of the DLR (Dynamic Language Runtime). The DLR is built on top of the CLR and is what makes dynamic languages possible on the .NET platform. In C# 4.0, the DLR is being leveraged to provide the new dynamic features offered in C# as well as the ability to bind to other dynamic languages and technologies, including IronRuby, IronPython, JavaScript, Office and COM. All this is made possible by the following new features in C# 4.0:

- Dynamically Typed Objects

- Optional and Named Parameters

- Improved COM Interoperability

- Co- and Contra-variance.

Dynamically Typed Objects

Currently in C#, it is fairly difficult to talk to dynamic languages such as JavaScript. It is also very difficult to talk to COM objects. Each of these has its own unique way of doing the binding. To make this more consistent, C# 4.0 introduces a new static type called “dynamic”. This sounds pretty funny, but it is a way to allow a statically typed language like C# to bind at runtime to dynamic, scriptable types in JavaScript, IronRuby, IronPython, COM, Office, etc. The dynamic type essentially tells C# that any method call on it is late bound. This is very similar to the idea of IDispatch in COM.

This “dynamic” type (which is also a keyword in C# 4.0) is very similar to the “object” type because, like “object”, anything can be assigned to a variable of type “dynamic”. In fact, internally to the CLR, the type “dynamic” is actually coded as type “object” with an additional attribute that says it has dynamic semantics. So, objects typed as “dynamic” have the compile-time type “dynamic”, but they also have an associated run-time type that is discovered at runtime. At runtime, the discovered run-time type is substituted for the dynamic type, and operations are bound against that actual type. This allows overloaded operators and methods to work with dynamic types.
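Here is a minimal sketch of what that run-time substitution means for overloaded methods. The DynamicDemo class and its Describe overloads are hypothetical names of my own; the point is only that the overload is picked from the value’s run-time type rather than from a compile-time type:

```csharp
using System;

public static class DynamicDemo
{
    public static string Describe(int value) { return "int: " + value; }
    public static string Describe(string value) { return "string: " + value; }

    public static void Main()
    {
        dynamic item = 42;
        // The overload is chosen at run time, based on the actual type (int).
        Console.WriteLine(Describe(item));   // prints "int: 42"

        item = "hello";
        // Same call site, but now the string overload is selected.
        Console.WriteLine(Describe(item));   // prints "string: hello"
    }
}
```

If no overload matched the run-time type, the failure would show up as a run-time binder exception instead of a compile error.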

The introduction of this new dynamic type is in response to realizing that there are many cases where we need to talk to things that are not statically typed and while this was previously possible it was rather painful. Some examples of this are:

- Talking to JavaScript from Silverlight C# code

- Talking to an IronRuby or IronPython assembly from a C# assembly

- Talking to COM from a C# assembly

Optional and Named Parameters

Optional method parameters are a feature that has been requested for a long time by C++ programmers who have moved to C#. Similar to C++, optional method parameters allow you to specify default values for parameters so that they do not need to be specified when calling that method. In C++, this was a great way to add new parameters to existing methods without breaking existing code. It also helps to reduce the number of overloads that need to be created on a class for common scenarios where you don’t want to make the user of the class specify more parameters than are really needed.

To have optional parameters in a method call, you simply add an “= <value>” after the parameter that you want to have a default value. The only restriction is that the optional parameters have to be at the end of the method signature. For example:

public StreamReader OpenTextFile(string path, Encoding encoding = null, bool detectEncoding = true, int bufferSize = 1024);

The OpenTextFile method above specifies one required parameter (the path) and three optional parameters. This allows the method to be called with just the path, or with the path followed by only some of the optional parameters, for example:

StreamReader sr = OpenTextFile(@"C:\Temp\temp.txt", Encoding.UTF8);

In this example, detectEncoding and bufferSize will both be set to the default values specified in the method signature.

This is actually taken one step further by also allowing “Named Parameters”. What this does is allow you to specify parameters by name when calling a method. The only restriction is that named parameters need to be specified last when calling a method. For example, I could also call the above method this way:

StreamReader sr = OpenTextFile(@"C:\Temp\temp.txt", Encoding.UTF8, bufferSize: 4096);

Using a named parameter in the example above allowed me to specify the bufferSize without having to specify the detectEncoding parameter. This does one better than what C++ had to offer.

In fact, you can specify all of the parameters by name in any order (although you must specify all non-optional parameters). For example, it is possible to call the above method this way:

StreamReader sr = OpenTextFile(bufferSize: 4096, encoding: Encoding.UTF8, path: @"C:\Temp\temp.txt");
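The calls above can be tried out with a small stand-alone sketch. To keep it runnable without touching the file system, I’ve swapped OpenTextFile for a hypothetical Describe method that simply reports the argument values it ends up with (the encoding is a plain string here, which is also my own simplification):

```csharp
using System;

public static class ParameterDemo
{
    // Hypothetical method: one required parameter followed by three optional ones.
    public static string Describe(string path, string encoding = "utf-8",
                                  bool detectEncoding = true, int bufferSize = 1024)
    {
        return string.Format("{0} | {1} | {2} | {3}", path, encoding, detectEncoding, bufferSize);
    }

    public static void Main()
    {
        // Only the required parameter; every optional parameter takes its default.
        Console.WriteLine(Describe("temp.txt"));

        // Positional arguments first, then a named argument that skips detectEncoding.
        Console.WriteLine(Describe("temp.txt", "ascii", bufferSize: 4096));

        // All arguments named, in any order; the required path must still be supplied.
        Console.WriteLine(Describe(bufferSize: 4096, path: "temp.txt"));
    }
}
```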

Improved COM Interoperability

The addition of Named Parameters and the new dynamic type makes COM interop much easier. Currently, calling COM automation interfaces (such as interfaces into Microsoft Office, Visual Studio, etc.) is very clumsy. Many of these interface methods have many parameters. When calling these you need to specify every one of them as a ref parameter whether you use them or not. This can lead to code that looks like the following:

object fileName = "Test.docx";

object missing = System.Reflection.Missing.Value;

doc.SaveAs(ref fileName, ref missing, ref missing, ref missing, ref missing, ref missing, ref missing, ref missing, ref missing, ref missing, ref missing, ref missing, ref missing);

In C# 4.0 this has been made much simpler. You no longer need to specify all of the missing arguments, which means the above call could be made more simply as:

doc.SaveAs("Test.docx");

What makes the COM interop story better in C# 4.0 is that:

1. For COM only, type ‘object’ is automatically mapped to type ‘dynamic’.

2. You no longer need to specify every parameter as a ‘ref’ parameter (the compiler does this for you).

3. No primary interop assembly is needed. The compiler can now embed interop types so you do not need the primary interop assembly when you run the application.

4. Optional and named parameters allow you to specify only the parameters that are pertinent in the COM calls.

Co- and Contra-variance

This is a bit more difficult to explain, but I’ll do my best. In the current C# version, Arrays are said to be “co-variant” because a more derived type of array can be passed where a less derived type is expected. For example:

string[] strings = GetStringArray();

Process(strings);

where the process method is defined as:

void Process(object[] objects) {…}

The problem with this is that it is not what you would call “safely” co-variant, because if the “Process” method were to replace one of the strings in the array with an object of another type, it would generate a runtime exception (an ArrayTypeMismatchException) rather than a compile-time error.
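A short sketch makes the hazard easy to see. The TryStore helper is a hypothetical name of mine; it accepts an object[] just like Process does, and reports whether the write was allowed at runtime:

```csharp
using System;

public static class ArrayCovarianceDemo
{
    // Accepts any reference-type array thanks to array co-variance,
    // but the element write is type-checked only at run time.
    public static bool TryStore(object[] slots, object value)
    {
        try
        {
            slots[0] = value;
            return true;
        }
        catch (ArrayTypeMismatchException)
        {
            return false;
        }
    }

    public static void Main()
    {
        string[] strings = { "a", "b" };
        Console.WriteLine(TryStore(strings, "c"));  // prints "True": a string fits in a string[]
        Console.WriteLine(TryStore(strings, 42));   // prints "False": an int does not
    }
}
```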

Likewise, the idea of “contra-variance” means that something of a less derived type can be passed in places where a more derived type is declared.

In the existing C# implementation, Generic types are considered “invariant” because one constructed generic type cannot be passed in place of another, even if its type argument is more derived. For instance:

IEnumerable<string> strings = GetStringList();

Process(strings);

where Process is now defined as:

void Process(IEnumerable<object> objects) {…}

The above code will not compile because generic types are invariant and must be specified as the exact type.

In C# 4.0, this will be allowed because the compiler would recognize that IEnumerable<T> is a read only type and therefore would not be in danger of being modified at runtime. So, it will be said that IEnumerable<T> will be safely co-variant in C# 4.0. What makes this possible is that in C# 4.0, IEnumerable<T> is now defined using a new “out” decorator on the T generic type. So now in C# 4.0 it is defined as:

IEnumerable<out T>

The “out” keyword says that the type T will only be used in output positions (for example, as a return value) and never as an input. This lets the C# compiler know that the type is safely co-variant. Likewise, using an “in” decorator will tell the compiler that the generic type is safely contra-variant. This allows all of the proper type checking to occur at compile time.
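Here is a small sketch of both directions as I understand them. IProducer, IConsumer and the classes that implement them are hypothetical types I invented to show the “out” and “in” decorators on user-defined interfaces; IEnumerable<T> is the real framework interface:

```csharp
using System;
using System.Collections.Generic;

// T appears only as a return value, so it can be marked co-variant.
public interface IProducer<out T> { T Produce(); }

// T appears only as a parameter, so it can be marked contra-variant.
public interface IConsumer<in T> { string Consume(T item); }

public class StringProducer : IProducer<string>
{
    public string Produce() { return "text"; }
}

public class ObjectConsumer : IConsumer<object>
{
    public string Consume(object item) { return "got " + item; }
}

public static class VarianceDemo
{
    public static int Count(IEnumerable<object> items)
    {
        int count = 0;
        foreach (object item in items) count++;
        return count;
    }

    public static void Main()
    {
        // Co-variance: an IEnumerable<string> is accepted as IEnumerable<object>.
        IEnumerable<string> strings = new List<string> { "a", "b" };
        Console.WriteLine(Count(strings));            // prints "2"

        // Co-variance on a user-defined interface.
        IProducer<object> producer = new StringProducer();

        // Contra-variance: something that consumes any object can consume strings.
        IConsumer<string> consumer = new ObjectConsumer();
        Console.WriteLine(consumer.Consume("text"));  // prints "got text"
        Console.WriteLine(producer.Produce());        // prints "text"
    }
}
```

All of these conversions are checked by the compiler, so unlike the array case there is no run-time type check waiting to fail.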

Beyond C# 4.0

Some of the interesting things that are planned for beyond C# 4.0 are features that will make it easier to do code generation and meta-programming (which are things that help contribute to the popularity of such platforms as Ruby on Rails). The idea of meta-programming is to be able to create executable code from within your application that can get eval’d at runtime. While this is currently possible using things like Reflection.Emit, it is difficult and painful at best with the current C# implementation. To make these very dynamic meta-programming scenarios possible, Microsoft is currently rewriting the C# compiler in managed code. This will allow the compiler itself to become a service.

Rewriting the compiler from the very black-box implementation written in C++ to one written in managed code will allow the compiler to be much more open. This will allow us to create applications that use meta-programming and/or code generation in a way where we can actively participate in the compilation process. Imagine being able to dynamically create rules-based code that can be compiled and evaluated at runtime. This is sort of analogous to generating SQL programmatically and running it.

This would also expose an object model for C# source code itself. The possibilities that this will enable will be quite profound.

The Future of C# at the PDC

While at the PDC08, I had the pleasure of attending the session “The Future of C#” given by C# author and architect Anders Hejlsberg himself. It was a great experience to hear about the future of this language from its founder. In this session Anders goes through a brief history of C# and where this evolution is heading with C# 4.0. He does a great job of explaining and demoing these new features. I highly recommend it.

The Future of C# presenter: Anders Hejlsberg

F# – Functional Programming for the Masses….

Functional programming has been around for quite some time. Many functional languages predate the languages we use today. In fact, Lisp was created in the late 1950s.

Functional programming is based on lambda calculus, which is a branch of mathematics that describes functions and their evaluation (see Wikipedia). Pure functional languages are built from mathematical functions and do not allow for any mutable state or side effects. This means that no state can be modified and, strictly speaking, no I/O can be performed. Because of this, until recently functional programming languages and concepts were primarily the domain of mathematicians and scientists doing theoretical work.

So, why the new interest in functional programming?

Some of this is due to functional programming concepts creeping into mainstream languages such as C#. With .NET 3.5 came LINQ (Language Integrated Query), which is firmly rooted in the functional programming paradigm. LINQ essentially builds an expression tree used to describe a query operation. This operation is then evaluated “lazily” at the moment it is needed. This idea of lazy evaluation is also a core concept in functional programming languages.
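The lazy part is easy to observe for yourself. The sketch below uses LINQ to Objects, where (as far as I know) the deferral is done with iterators rather than an expression tree, but the observable behavior is the same. The LazyDemo class and its Evaluations counter are hypothetical and exist only so we can see when the predicate actually runs:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LazyDemo
{
    // Counts how many times the predicate has really been invoked.
    public static int Evaluations;

    public static bool IsEven(int n)
    {
        Evaluations++;
        return n % 2 == 0;
    }

    public static void Main()
    {
        var numbers = new List<int> { 1, 2, 3, 4 };

        // Defining the query does not run the predicate.
        IEnumerable<int> evens = numbers.Where(n => IsEven(n));
        Console.WriteLine(Evaluations);   // prints "0"

        // Enumerating the query (here via ToList) is what triggers the work.
        List<int> result = evens.ToList();
        Console.WriteLine(Evaluations);   // prints "4"
        Console.WriteLine(result.Count);  // prints "2"
    }
}
```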

So why is functional programming important?

While Moore’s law (which is the doubling of the number of transistors that can fit on an IC every two years) continues to be true, we are not seeing this same trend in clock speeds which have traditionally given us the performance boosts that we have seen over the years. With the advent of multi-core processors, the future shows us that to increase performance, instead of clock speeds increasing (which has a physical limitation that is due to the speed of light), we will see an exponential growth in the number of processor cores. Currently, two and four cores are popular but soon we will see 8, 16, 32 and more cores.

To take advantage of all of these cores, multi-threaded programming will become more and more important. We all know that multi-threaded programming is very difficult at best. With mutable state and multi-threaded programming, the possibility of encountering race conditions, deadlocks and corrupted data goes up dramatically with the number of cores, causing a much greater possibility of producing “Heisenbugs” (bugs that are very difficult to debug because the behavior is changed by the act of debugging).

Because functional programming does not use mutable state, it is very well suited to easily and safely handling computationally expensive processing across many, many cores in parallel.
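As a small illustration of why the absence of mutable state helps, here is a sketch in C# (rather than F#) that uses the AsParallel() extension method from Parallel LINQ, which ships with .NET 4.0. ParallelDemo, Square and the two Sum methods are names I made up. Because Square depends only on its input and touches no shared state, the same query can be run across cores without any locks:

```csharp
using System;
using System.Linq;

public static class ParallelDemo
{
    // A pure function: the result depends only on the argument.
    public static long Square(int n)
    {
        return (long)n * n;
    }

    public static long SumSequential(int count)
    {
        return Enumerable.Range(1, count).Select(n => Square(n)).Sum();
    }

    // Identical query, except the work may be spread across multiple cores.
    public static long SumParallel(int count)
    {
        return Enumerable.Range(1, count).AsParallel().Select(n => Square(n)).Sum();
    }

    public static void Main()
    {
        long sequential = SumSequential(1000);
        long parallel = SumParallel(1000);
        Console.WriteLine(sequential == parallel);  // prints "True"
    }
}
```

If Square updated a shared counter or collection instead, the parallel version would need explicit synchronization, which is exactly the kind of code that breeds the bugs described above.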

Enter, Microsoft F#

Microsoft has recently introduced a new, mostly functional programming language built on top of the .NET CLR. This new language, called F#, came out of the Microsoft Research group and is in the process of being productized so that it can be included in a future release of Visual Studio.

F# at the PDC 2008

At the PDC08, Luca Bolognese, the product manager for F#, gave an excellent presentation on F#. In his talk he created a program in F# that performed various financial calculations on stock prices that were downloaded from the internet. What was really interesting was how he went about writing the program. Typically, when writing programs using imperative languages like C#, we tend to take a top down approach. Sure, we break our programs into methods but we think more or less in a procedural fashion. With functional languages, as was the case in this presentation, the solution is often composed from the inside out, much like you would compose a complex SQL statement or an XSL transform. With SQL or XSL, you often start in the middle by starting with a basic function then running it to check the results. Then you slowly filter and modify the results to slowly build out the desired solution. Because of this, when you look at the final result, it can look complex and you might often scratch your head when you come back to it at a later date.

This inside-out composition technique is exactly how Luca built his solution, slowly getting to the final desired result. The amazing thing was how little code it took to reach his end result compared to what would have been necessary if it were written in C#. Much of the “noise” was removed by the F# solution, and in the end it really described the problem at hand. What really helped this approach is that F# uses type inference, so you don’t need to declare many of your types. This made the process of composing the program much easier. This is not to imply that F# is not statically typed; it is NOT a dynamic language. In fact, functions are types in F#.

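Another technique he showed was pipelining with F#’s forward pipe operator (|>). This is basically piping the result of one function into the input of another. (It is related to, but not the same as, currying, which turns a function of several arguments into a chain of single-argument functions and is what makes partially applied functions so convenient to use in a pipeline.) This made the code very readable and gave a clear understanding of what was being accomplished.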
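To make the difference between piping and currying a little more concrete, here is a rough C# approximation. This is not F# and not Luca’s code; the Pipe extension method is a hypothetical helper that mimics what the F# |> operator does, and Add is a hand-curried function:

```csharp
using System;

public static class FunctionalDemo
{
    // Curried addition: takes x and returns a new function that takes y.
    public static Func<int, Func<int, int>> Add = x => y => x + y;

    // Hypothetical helper that feeds a value into the next function,
    // similar in spirit to F#'s forward pipe operator.
    public static TResult Pipe<T, TResult>(this T value, Func<T, TResult> next)
    {
        return next(value);
    }

    public static void Main()
    {
        // Partial application: supplying only the first argument of the curried function.
        Func<int, int> addTen = Add(10);

        // Piping: 5 flows into addTen, and that result flows into the doubling lambda.
        int result = 5.Pipe(addTen).Pipe(n => n * 2);
        Console.WriteLine(result);  // prints "30"
    }
}
```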

After creating the program, Luca showed how simple it was to parallelize his solution to run across multiple processors. To do this, he needed to make only slight modifications to the code but he didn’t need to add any locks, monitors, wait handles or any of the other constructs we normally associate with multithreaded programming. In fact, his solution would safely run against as many processors as could be contained on a chip. Amazing…doing this in an imperative language like C# would scare the most seasoned developer.

Since F# is built upon the CLR, it is a functional language that can interact both with the rich class library of the .NET Framework and with assemblies written in other .NET languages. This opens up lots of interesting scenarios and gives us the possibility of writing computationally expensive algorithms in F#, which provides a safe story for parallelization.

Here is a link to Luca Bolognese’s presentation of F# at the PDC 2008. I highly recommend it. Luca’s presentation style is as entertaining as it is informative. The bulk of his presentation was done as a demo, which should please the developer in all of us.

An Introduction to Microsoft F# presenter: Luca Bolognese

Other resources for F#

Oslo – It’s a Floor Wax and a Dessert Topping…

At this year’s PDC, Microsoft has finally unveiled a first look at their new modeling platform, code-named “Oslo”.

The first question that comes to mind before we can talk about Oslo is “What is modeling?”

Modeling in software development is nothing new. In fact it has been attempted in several forms before. In the past, software modeling was done with CASE tools that attempted to turn UML type graphical structures into code. In general, these tools were very expensive and for the most part were tied to specific software development paradigms created by the thought leaders of that time (e.g. Booch, Rumbaugh, Jacobson, etc.). One of the more popular tools, Rational Rose, allowed users to create UML diagrams which could then be used to generate code.

While all of these various modeling technologies captured the imaginations of developers at that time, they really failed to deliver on the promise of making the software development process more manageable and understandable. I remember trying out Rational Rose and bumping up against the following issues:

- The impedance mismatch between a general software modeling language like UML and the underlying programming languages of that time (i.e. C and C++). It was sometimes difficult to express specific C++ constructs in UML and vice versa.

- The graphical nature of the modeling along with the monitor sizes of that time made it difficult to get a good view of complex software solutions.

- Also, software, in general, was much more tightly coupled in those days making it more difficult to model as well.

While I’m sure there are success stories that can be cited from this style of modeling, it hasn’t been part of what I would call mainstream development. These tools were just too difficult and expensive making it questionable whether they provided any real advantage.

When I originally was exposed to these tools, I was writing shrink-wrapped software that was written for a single PC. Since this time software development has gotten much more complex. Throughout the history of software development there have been new abstractions introduced to make things easier so that we could produce more robust software solutions. For example:

- Assembly Language was introduced to make machine code easier

- C made it easier to create more complex solutions that were difficult in assembly

- SQL provided a common language that could operate on top of structured data

- C++/SmallTalk and other Object Oriented languages helped reduce complexity by allowing encapsulation into objects

- COM/DCOM/CORBA was introduced to make it easier to break software into reusable components

- .NET/Java and other runtime languages provided an abstraction on top of the underlying operating system

- XAML provides a more declarative language abstraction that describes what is desired rather than the imperative steps to accomplish the outcome

Often these technologies were received with mixed feelings. Some could see their potential value while others talked about the loss of control and performance that the abstraction would bring. But with many of these, the increase in computing power and the new solutions that were made possible justified the move to greater and greater abstraction.

Speaking from my own personal experience, the software development process has gotten more and more complex, especially with regard to large enterprise solutions, which have become almost unmanageable. Now with SOA-type architectures we no longer have systems that are deployed and run in a monolithic fashion. Instead these systems are an interconnected maze of services, not all of which are under your direct control. Systems have gotten very difficult to maintain and configure. It also seems that software has a much longer lifetime, making it very difficult to understand its intent, especially as new developers come on board and the experienced developers who originally authored the code leave.

Microsoft realizes that this complexity is just going to increase as we look forward to the future where some software will be deployed both on-premises and in the “Cloud” as well as to many other devices. These future solutions and experiences will be very difficult to create and manage using the tools we have today. Raising the level of abstraction to the next level will be needed, which is what Microsoft is attempting to do with the introduction of “Oslo” at PDC08.

Oslo is quite an expansive offering from Microsoft that brings Modeling into the forefront. Instead of just modeling the software itself, Oslo has the much broader and ambitious goal of modeling the entire software development lifecycle, from inception and business analysis to design and implementation to deployment and maintenance. With Oslo, these models are living and breathing. They not only organize and support the development process, they are actually used to run the software and systems using runtimes that consume these models.

During the PDC08, Chris Anderson and Giovanni Della-Libera quipped that Oslo was a “…dessert topping and a floor wax…” (click here for SNL reference). This overarching view where Oslo is fully integrated into the software development process is what makes this project so ambitious.

In short, Oslo is composed of three primary building blocks:

- Repository: In Oslo all of the modeling data is stored in a SQL Server repository. This data covers all of the entities being modeled, such as software entities, workflows, business analysis, hardware, stakeholders, etc.

- Modeling Language (“M”): Oslo comes with a new modeling language called “M”. This language has several dialects:

- M-Schema : This dialect is essentially a Domain Specific Language (DSL) for modeling storage, more specifically database storage. Using this DSL, one can easily create a database schema.

- M-Graph : This dialect is a DSL for adding data to a database. This DSL enables a very simple way to populate a database.

- M-Grammar : This is the most interesting of the three because it is essentially a DSL for creating DSLs. Creating languages is very complex and was previously left to researchers. M-Grammar makes it much easier to create textual Domain Specific Languages that give users a way to code in specific domains.

- Modeling Tool “Quadrant” : To be able to consume, browse, create and change the vast amount of modeling data associated with an enterprise software solution, Microsoft needed to create a tool that would make this feasible. This tool is code-named “Quadrant”. “Quadrant” is essentially a graphical front end to the Oslo Repository.
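To make the idea of a textual DSL driven by a grammar a little more concrete, here is a minimal Python sketch. It is not “M” syntax and does not use any Oslo tooling. The grammar table, the rule names (contact, service) and the parse function are all hypothetical, invented purely for illustration. The point is only that the grammar is data, and one generic function turns text written in the little language into structured records.

```python
import re
from typing import Dict, List

# Hypothetical grammar (not "M"): each rule name maps to a regular
# expression whose named groups become the fields of the parsed record.
GRAMMAR: Dict[str, str] = {
    "contact": r"contact\s+(?P<name>\w+)\s+age\s+(?P<age>\d+)",
    "service": r"service\s+(?P<name>\w+)\s+calls\s+(?P<target>\w+)",
}


def parse(text: str, grammar: Dict[str, str]) -> List[Dict[str, str]]:
    """Parse line-oriented DSL text into a list of records.

    Blank lines are skipped. A line that matches no rule raises ValueError.
    """
    compiled = {rule: re.compile(pattern) for rule, pattern in grammar.items()}
    records: List[Dict[str, str]] = []
    for line_no, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.strip()
        if not line:
            continue
        for rule, pattern in compiled.items():
            match = pattern.fullmatch(line)
            if match:
                # Record which rule matched, plus the captured fields.
                records.append({"rule": rule, **match.groupdict()})
                break
        else:
            raise ValueError(f"line {line_no}: no rule matches {line!r}")
    return records


if __name__ == "__main__":
    source = "contact Ann age 30\nservice Billing calls Ledger"
    for record in parse(source, GRAMMAR):
        print(record)
```

Adding a new kind of statement to this toy language means adding one entry to the grammar table rather than writing a new parser, which is roughly the convenience a grammar-definition language is meant to offer.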

Microsoft showed off all three of these components of Oslo at the PDC in the various presentations and keynotes. It was very obvious that we were seeing the first results of the last few years of effort. Many of the demos that were shown were fairly simplistic, making it clear that there is still a considerable amount of work to do before Oslo is ready for prime time.

At the PDC there were five sessions devoted to Oslo. These were all very informative and very well attended. I was able to attend many of these live and the rest I have watched online. Here are the links along with a quick summary of each.

A Lap around “Oslo”

Presenters: Douglas Purdy, Vijaye Raji

This presentation was a great introduction to Oslo touching on all of its components. If you have time to watch only one of these sessions, I would recommend this one as it gives you a general feel for the potential of this new modeling platform.

“Oslo”: The Language

Presenters: Don Box, David Langworthy

This presentation discussed the details of the “M” language itself. They showed how the language is used to generate schemas and data, and briefly touched on creating DSLs. They also showed the “Intellipad” tool that was used to edit and create “M”. I’m sure that this Intellipad functionality will at some point be integrated directly into Visual Studio.

“Oslo”: Building Textual DSLs

Presenters: Chris Anderson, Giovanni Della-Libera

This was a very interesting presentation that showed how to create new textual domain specific languages (DSLs) using “M”. Language creation can be a very daunting task. I’m sure anyone who studied computer science in college will remember how difficult it was to use LEX and YACC to create a language. “M-Grammar”, which is what this session discusses, makes it very easy to create DSLs. I believe that DSLs will be more and more prevalent in the future and will enable applications to be created more quickly and reliably. Many of us deal with DSLs in our day to day work already. These common DSLs include XSL (a language for transforming XML), SQL (a language for querying data), HTML (a language for creating web content), etc.

I highly recommend this presentation. To get the most out of it, you may want to watch the two previous ones I mentioned before watching this one.

“Oslo”: Customizing and Extending the Visual Design

Presenters: Don Box, Florian Voss

This presentation drilled into the “Quadrant” tool used to explore the models stored within an Oslo repository. While it was obvious that this tool is a work in progress and still has quite a ways to go to achieve its goals, you can get an idea of how it lets you view the enterprise in a simple, ad-hoc way. This session goes into the extensibility of the “Quadrant” tool and how it can be tailored to fit very different types of users.

“Oslo”: Repository and Models

Presenters: Chris Sells, Martin Gudgin

This presentation gets into the repository itself. The repository is built on top of SQL Server and provides secure access to the model data stored there. This presentation touches on the many models (written in “M”) that are included with the repository. These existing models are used to model things like: Identity, Applications, Transactions, Workflow, Hosting, Security, and Messaging (just to name a few). Chris Sells shows how models are compiled and loaded into the repository as well as how to access model data from the repository. In addition to this he also covers the core services provided by the repository, which are: Deployment, Catalog, Security and Versioning.

Other interesting posts on Oslo:

In addition to these videos, here are some other interesting articles that I have stumbled across on Oslo:

PDC08, from my perspective…

The PDC was a great conference showing much of Microsoft’s future vision for their products and platforms.

I would say that this conference could be broken up into several major areas of interest:

- Oslo

- Azure Cloud Operating System

- Live Services

- User Interface – Silverlight

- Languages – C#, Dynamic Languages, F#

- Windows 7

Overall, I was very impressed with Microsoft’s ability as a company to coordinate the efforts of many diverse groups and technologies throughout the company. It seems that many of the efforts and initiatives that Microsoft has undertaken are starting to come together into a common cohesive vision. That said, it was also evident to me that they still have a bit of work to do to bring all of this new technology together for prime time usage. Although the PDC is an event that usually occurs only every few years, I did hear a rumor that Microsoft has already announced a PDC09. This makes me believe that the timing of this PDC may have been a little aggressive. For many of the Azure and Live services sessions the presenter was in constant IM contact with the Microsoft datacenter. That tells me that there were lots of stability concerns regarding the products that had strong reliance on their cloud services.

My personal interests drove me to many of the sessions on their cloud-based services and Oslo. It was pretty well known that Microsoft would be announcing a cloud-based platform and the Live Mesh client-side platform, but many of the details were shrouded in secrecy before the PDC. Oslo was also talked about a little before the PDC and was shown in a little more detail at the PDC.

Over the last several years Microsoft has been building datacenters at a record pace. During the first keynote address, Ray Ozzie talked about how Microsoft realized, while building up its own web-based internet properties, that many of the same activities were being undertaken by companies large and small around the world. While many large companies could afford to build large datacenters that provide reliability, redundancy, fault tolerance, etc., many smaller companies were struggling with this overwhelming task. Even very large companies were having trouble scaling out their services to handle geo-location and fault tolerance. It was becoming clear to Microsoft that cloud-based services to complement on-premises software were needed. In his keynote, Ozzie recognized similar efforts by both Google and Amazon in this area.

With Windows Azure, Microsoft differentiates itself from the cloud-based services offered by both Google and Amazon by offering a service that is:

- Abstracted out to be completely elastic, by allowing computing power to be dynamically sized and scaled at runtime so that you can handle peak loads without paying for more than you need.

- Geographically distributed, by being able to spread a cloud-based deployment across the globe.

- Fully fault tolerant. All data and software in the cloud is located in several places in the cloud and always spread between servers at different locations.

- An infrastructure that can easily be connected to on-premises software through an internet service bus.

- And the list goes on…

With Windows Azure will come many interesting deployment tools that will make deploying and managing applications in the cloud easy. Microsoft has created many new and interesting technologies to create what they call “Fabric”. This “Fabric” is the abstraction that sits on top of the actual servers that are running inside their many datacenters. You can think of this “Fabric” as an abstraction that is a few levels higher than virtual machines.

From all of the various sessions on Azure and cloud-based services it was very clear that the new world of cloud-based software was going to require us to think differently about how we architect, design and implement our software so that it is better suited to fit into a cloud-based paradigm which, in my opinion, is where things will be headed in the next ten years. Many of these practices involve the proper decoupling of software as well as other practices that are just part of good software design.

As part of this new cloud-based initiative, it is clear that WCF and WF (Windows Workflow) are going to be two very fundamental enabling technologies. Up to this point, we haven’t seen too much usage of Windows Workflow but it is clear that this will be a large part of many cloud-based applications. As part of this, Microsoft announced a new server product called “Dublin” which is an application server built using WCF services that front Windows Workflows. This server product has many advances in Windows Workflow that make it an ideal choice for hosting workflow in the cloud.

There were also talks given on the new data technologies that extend SQL Server into the cloud. These were dubbed “SQL Services”. One of the new services is SQL Server Data Services. This service provides a new way to retrieve data over the internet using REST-based protocols. These REST protocols query and update data using the standard HTTP verbs GET, POST, PUT and DELETE, and they are built on the idea that specific data resources are uniquely addressable using URIs. REST-based protocols are built to scale like the internet itself. It was clear that in addition to SOAP-based protocols, Microsoft was heavily investing in REST-based protocols as well.
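To illustrate how the HTTP verbs map onto URI-addressable resources, here is a small Python sketch. It is not the SQL Services API and it makes no network calls. ResourceStore, its handle method and the /customers URIs are hypothetical names chosen for the example, and the status codes follow common REST conventions rather than anything shown at the PDC.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Tuple


@dataclass
class ResourceStore:
    """In-memory stand-in for a REST data service (hypothetical, for illustration)."""

    resources: Dict[str, Any] = field(default_factory=dict)
    next_id: int = 1

    def handle(self, method: str, uri: str, body: Optional[Any] = None) -> Tuple[int, Any]:
        """Apply one HTTP-style request and return (status code, result)."""
        method = method.upper()
        if method == "GET":
            # Read the resource addressed by the URI.
            if uri in self.resources:
                return 200, self.resources[uri]
            return 404, None
        if method == "POST":
            # Create a new resource under a collection URI; the store picks the ID.
            new_uri = f"{uri.rstrip('/')}/{self.next_id}"
            self.next_id += 1
            self.resources[new_uri] = body
            return 201, new_uri
        if method == "PUT":
            # Create or replace the resource at exactly this URI.
            created = uri not in self.resources
            self.resources[uri] = body
            return (201 if created else 200), body
        if method == "DELETE":
            if uri in self.resources:
                del self.resources[uri]
                return 204, None
            return 404, None
        return 405, None  # verb not supported by this sketch


if __name__ == "__main__":
    store = ResourceStore()
    status, location = store.handle("POST", "/customers", {"name": "Ann"})
    print(status, location)
    print(store.handle("GET", location))
    print(store.handle("DELETE", location))
    print(store.handle("GET", location))
```

Because every resource has its own URI and each request carries everything needed to process it, requests like these can be spread across many servers, which is a large part of why REST-style protocols scale well.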

I also attended a session on storing scalable data in the cloud. From what I could gather, one will need to rethink how data is organized in order to take full advantage of the scalability that the cloud offers. What was described was very close to what Amazon does with its data storage services. Basically, there are three storage items in cloud-based storage services: blobs, tables and queues. From what I could understand, the table storage was pretty basic. Much of the storage seemed to center around entities, which fits nicely with Microsoft’s Entity Framework (which was just released with .NET 3.5 Service Pack 1). They alluded to providing more relational storage in the cloud in the future, but none of the cloud storage options had any relational capabilities. I would imagine that much of this is because they want to provide a massively scalable and reliable data platform and the relational aspects would make this task much more difficult (which is the same route that Amazon appears to have taken). I am not sure where relational cloud storage would fit in, but I would think that they would need to have a story here.
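The three storage items can be pictured with a small in-memory Python sketch. This is only a mental model and not the Azure storage API. The class CloudStorageSketch, its method names and the orders/customers tables are all hypothetical. The helper at the end shows what the lack of relational capabilities means in practice: with no joins, the application relates two entities itself by doing two key lookups.

```python
from collections import deque
from typing import Any, Deque, Dict, Optional


class CloudStorageSketch:
    """Hypothetical in-memory model of blob, table and queue storage."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}
        self.tables: Dict[str, Dict[str, Dict[str, Any]]] = {}
        self.queues: Dict[str, Deque[str]] = {}

    # Blobs: opaque bytes stored under a name.
    def put_blob(self, name: str, data: bytes) -> None:
        self.blobs[name] = data

    def get_blob(self, name: str) -> Optional[bytes]:
        return self.blobs.get(name)

    # Tables: entities (property bags) looked up by key, with no joins.
    def upsert_entity(self, table: str, key: str, entity: Dict[str, Any]) -> None:
        self.tables.setdefault(table, {})[key] = dict(entity)

    def get_entity(self, table: str, key: str) -> Optional[Dict[str, Any]]:
        entity = self.tables.get(table, {}).get(key)
        return dict(entity) if entity is not None else None

    # Queues: first-in, first-out messages.
    def enqueue(self, queue: str, message: str) -> None:
        self.queues.setdefault(queue, deque()).append(message)

    def dequeue(self, queue: str) -> Optional[str]:
        pending = self.queues.get(queue)
        return pending.popleft() if pending else None


def order_with_customer(storage: CloudStorageSketch, order_id: str) -> Optional[Dict[str, Any]]:
    """Combine an order with its customer in application code (two lookups, no join)."""
    order = storage.get_entity("orders", order_id)
    if order is None:
        return None
    customer = storage.get_entity("customers", order["customer_id"])
    return {**order, "customer": customer}


if __name__ == "__main__":
    storage = CloudStorageSketch()
    storage.upsert_entity("customers", "c1", {"name": "Ann"})
    storage.upsert_entity("orders", "o1", {"customer_id": "c1", "total": 25})
    print(order_with_customer(storage, "o1"))
```

Keeping each lookup to a single key is what lets a store like this spread data across many servers, which matches the scalability trade-off described above.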

In addition to recognizing how difficult it is for companies to write highly scalable software and the need for cloud-based computing, Microsoft has put a considerable investment into “Oslo”, which is a software modeling technology. In recent years, software has become more and more complex. It is also recognized that there are many aspects of software development that need to be coordinated. These aspects include business analysis, software architecture, software design and implementation, software deployment, etc.

Over the past 20 years, there have been many attempts to model the software development process. Many of these attempts, like Rational Rose, have had very limited success. With “Oslo” Microsoft has taken software modeling to the extreme. This appears to be one of Microsoft’s most ambitious projects in recent years. In “Oslo” all of the modeling data is stored in a database repository. On top of this repository there is a highly customizable user interface that is used to explore it. The user interface is very interesting and provides very in-depth views into a given software application and how it connects to various other applications and services.